📝 AI CONCERN • AI CERN • BLOOMBERG GPT • GPT PLUGINS | V23

Can progress be too fast?

📝 CONCERN ABOUT AI

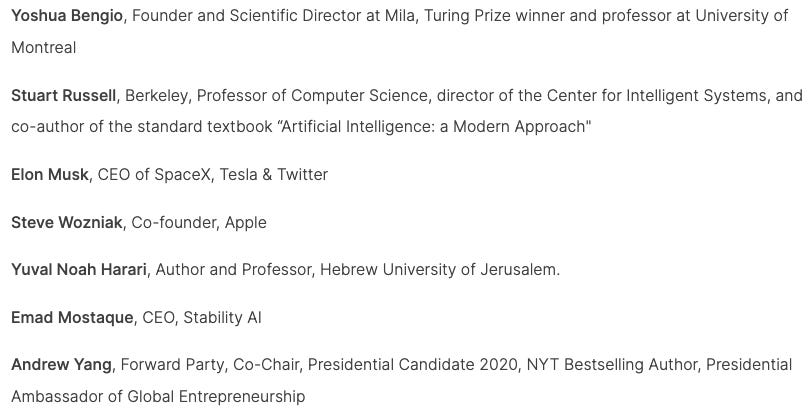

Is AI development reaching a pace that we can’t control? The Future of Life Institute certainly thinks so and published an open letter calling “on all AI labs to immediately pause for at least 6 months the training of AI systems more powerful than GPT-4”.

The open letter has already gathered over 10,000 signatures, including some pretty big names from the world of AI, tech and beyond. You can see the full list here.

It is becoming obvious that we are developing a very powerful technology that needs to be handled with extreme care – whether a forced pause is a good idea is still an open discussion, though. This is an extremely hard question with no apparent right or wrong answer.

Two other AI legends, Google Brain co-founder Andrew Ng and Meta’s Chief AI Scientist Yann LeCun, will argue against the 6-month pause in a talk tomorrow, April 7th.

Sam Altman, the CEO of OpenAI, also made an interesting argument on Lex Fridman’s podcast. In essence, he asked whether it would be safer to have superintelligent AI take off now, in a comparatively slow way, or later, in a much faster way. What do you think?

For some more context on the development of these kinds of systems – Kara Swisher (who is known to be an outspoken sceptic when it comes to hyped technologies) had a really good and nuanced conversation with Sam Altman on her podcast:

🔭 A CERN FOR AI

Most major AI research is currently happening behind closed doors inside big commercial enterprises like OpenAI, Google or Meta.

LAION (the Large-scale Artificial Intelligence Open Network) has launched a petition proposing a publicly and internationally funded supercomputing facility to train open-source foundation models (large models like GPT-4 that can be used as the foundation for endless other applications). The aim is to share the power and potential of AI openly, beyond a couple of big companies.

LAION is proposing a model similar to that of CERN, the largest particle physics lab in the world, which is operated by a group of 23 countries. CERN has helped drive human progress through big contributions to advanced technology and the world of physics. Fun fact: it’s also the birthplace of the World Wide Web.

Imagine what an AI lab on that scale could unlock.

🏦 BLOOMBERG GPT

Bloomberg, the financial news company, announced that they trained a specialised LLM (Large Language Model) for the financial domain.

The model, called BloombergGPT, outperforms similar models in the finance domain ‘by large margins’, whilst also keeping up with others on more general tasks.

To train the model, Bloomberg combined public data with their treasure trove of forty years of financial documents. This is a great example of the value of proprietary data moving forward. Almost paradoxically, old companies with a lot of historical data on their domain could have an advantage over younger startups (if they have the means to use technologies like LLMs, that is…).

I’m very curious about how this model will find its way into existing Bloomberg products and what users will do with it.

🔌 GPT PLUGINS

↗ OpenAI

One of the huge limitations of ChatGPT used to be that it was isolated from the internet. Given that it was trained on data that ends sometime in 2021, its usefulness in everyday situations was pretty limited.

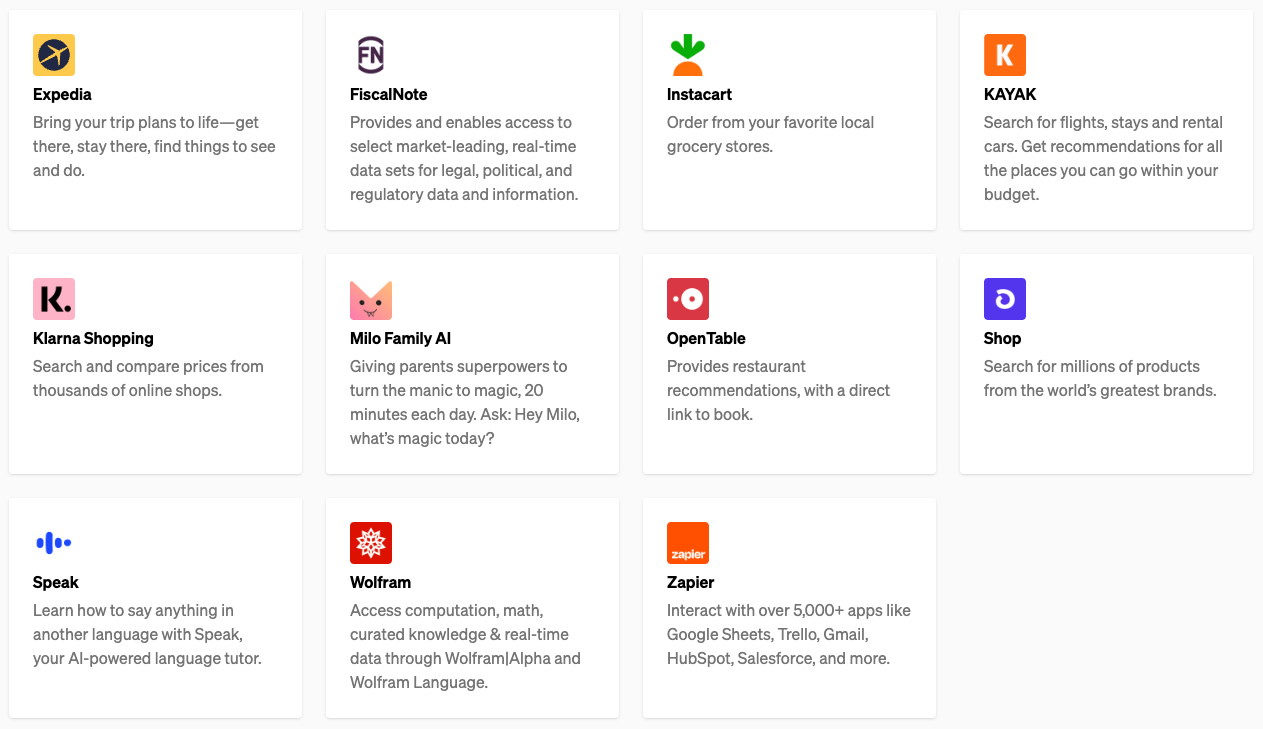

This is changing. OpenAI announced ChatGPT Plugins, which allow ChatGPT to interact with other applications. Here are some early examples:

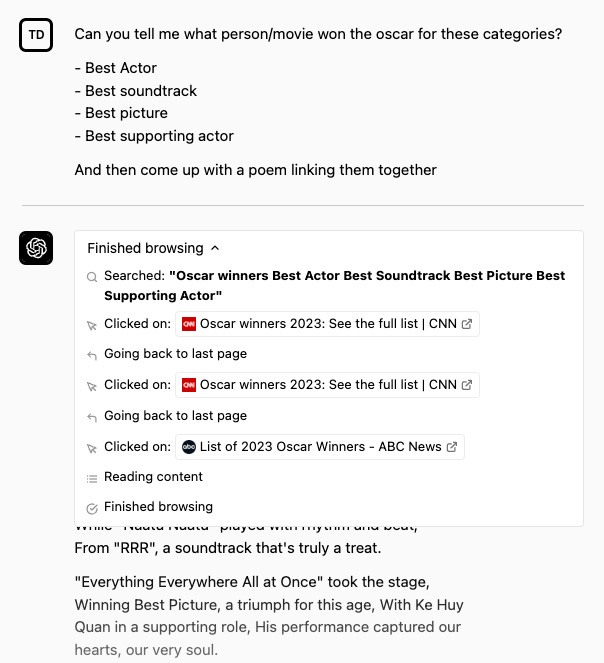

OpenAI also released two plugins themselves: a web browser and a code interpreter. All these plugins can be used by ChatGPT to get information for its responses. Here is an example of how it would use the web browser:

The release of ChatGPT felt like the iPhone moment for AI – a simple and intuitive way to interact with a new technology. The launch of ChatGPT Plugins feels like the App Store moment – the birth of a new marketplace opening up this technology to countless creators.

Getting AI models to interact with other technologies as well as with other models can make them extremely useful in our everyday life. But, to circle back to the open letter at the beginning, it also carries a lot of potential risks as AI can interact with more and more things around us. This is known as the control problem.

As DeepMind co-founder Demis Hassabis puts it: AI developers have to be "bold and responsible".

The Input is a newsletter made with 🖤 by Nice Outside on planet earth. If you have feedback, are interested in geeking out about any of the things mentioned above or just want to jam on an idea, feel free to reach out to max@no.studio.